AI

Facebook Inc. today revealed that it’s going all-in on PyTorch as its default artificial intelligence framework.

The company said that migrating all of its AI systems to PyTorch will let it innovate much more quickly while delivering a better experience for its users.

PyTorch is an open-source machine learning library based on the Torch library. It was created by Facebook’s AI Research unit, and it already powers a wide range of AI applications, such as computer vision and natural language processing models. Examples of PyTorch models at Facebook include those that personalize users’ feeds and Stories on Instagram and those that identify and remove hate speech on Facebook.

“We’re at that inflection point for PyTorch,” Facebook Chief Technology Officer Mike Schroepfer said in a press briefing. “We’re standardizing all of our workloads on PyTorch.”

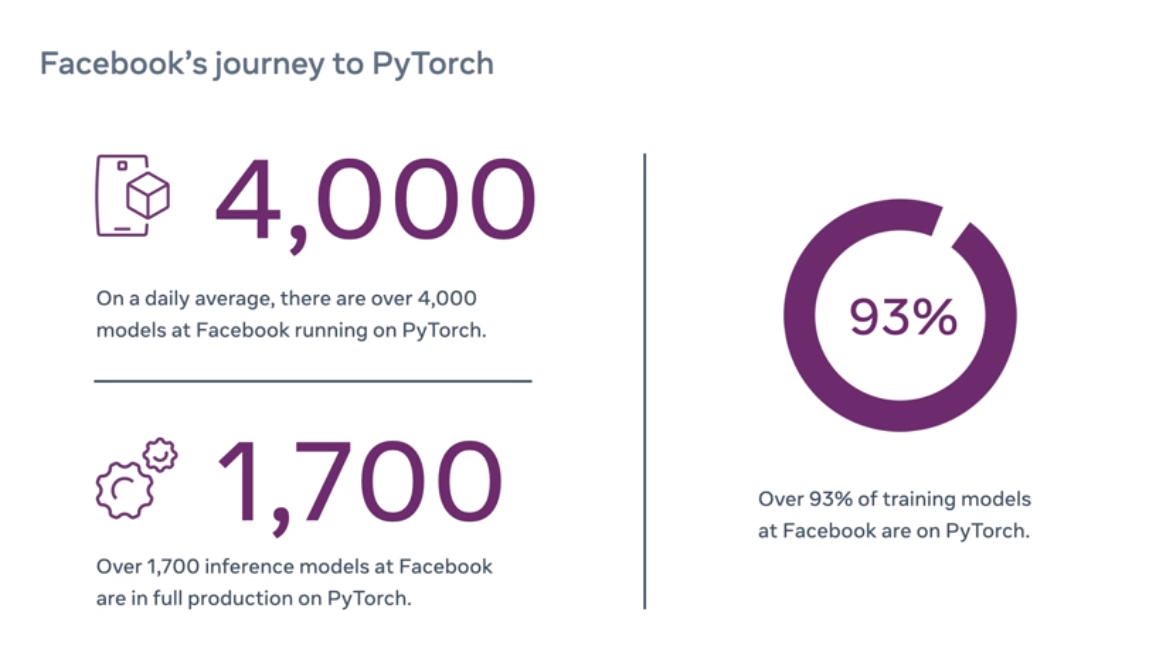

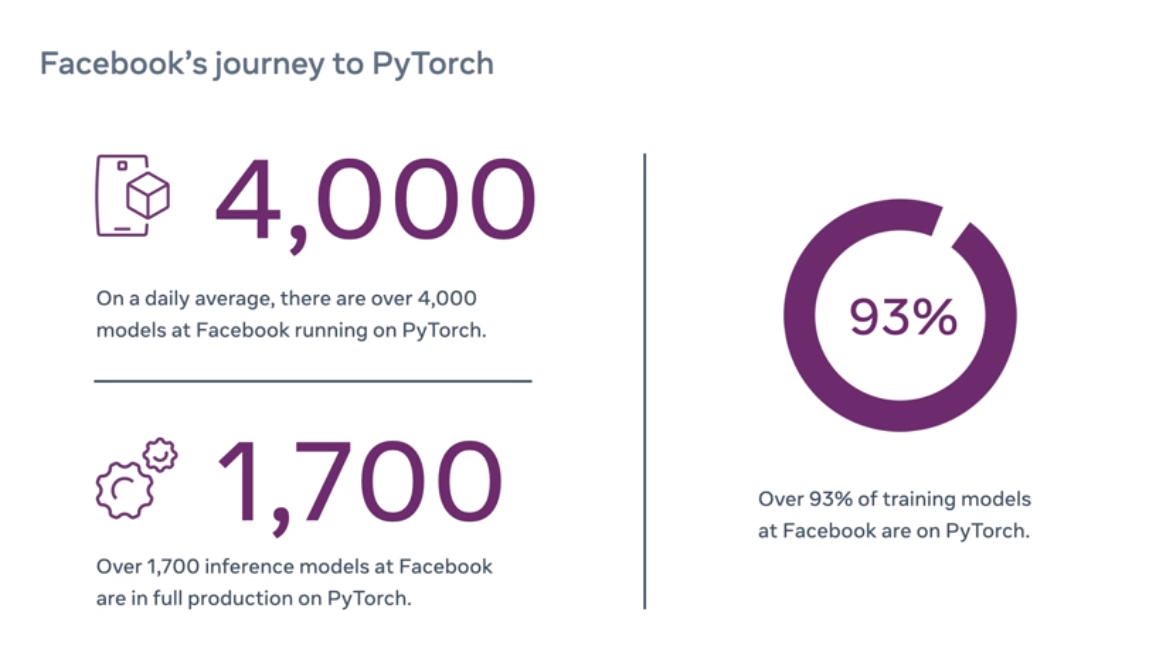

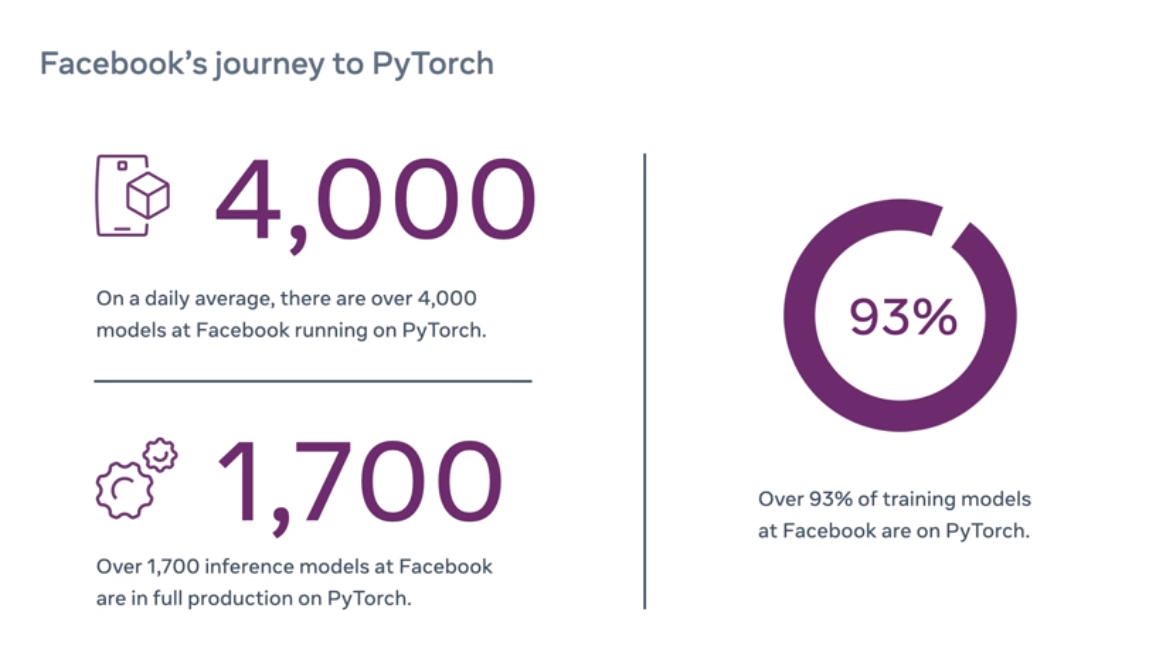

In a blog post, Facebook said the migration has been under way over the past year and that it has already moved most of its AI tools to PyTorch. It said it has more than 1,700 PyTorch-based inference models running across its site and services. In addition, 93% of its new training models used for identifying and analyzing content on Facebook are based on PyTorch.

One reason for moving to PyTorch is that AI’s research-to-production pipeline has traditionally been tedious and complex, Facebook said. It involves many steps, multiple tools and fragmented processes, with no clear standard for managing end-to-end workflows. Another problem is that researchers were forced to choose between AI frameworks optimized either for research or for production, but not both.

Facebook’s AI team created PyTorch with usability as its key goal. One advantage of the PyTorch 1.0 release in 2018 was that it merged research and production capabilities into a single AI framework for the first time, Facebook explained.

“This new iteration merged Python-based PyTorch with production-ready Caffe2 and fused together immediate and graph execution modes, providing both flexibility for research and performance optimization for production,” Facebook wrote in its blog. “PyTorch engineers at Facebook introduced a family of tools, libraries, pretrained models, and data sets for each stage of development, enabling the developer community to quickly create and deploy new AI innovations at scale.”
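The article doesn’t show what “fused together immediate and graph execution modes” looks like in practice. One mechanism PyTorch has offered for this since 1.0 is TorchScript, which compiles an ordinary eager-mode module into a graph that can be serialized and run without Python. The sketch below assumes that TorchScript is what the quote refers to; `TinyClassifier` is a hypothetical toy model, not one of Facebook’s.

```python
import torch
import torch.nn as nn


class TinyClassifier(nn.Module):
    """Hypothetical toy model used only for illustration."""

    def __init__(self) -> None:
        super().__init__()
        self.linear = nn.Linear(4, 2)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Ordinary Python control flow, easy to step through in eager mode.
        if x.sum() > 0:
            x = x * 2
        return self.linear(x)


model = TinyClassifier().eval()
example = torch.randn(3, 4)

# Eager ("immediate") mode: the module runs line by line as regular Python.
eager_out = model(example)

# Graph mode: TorchScript compiles the same module, including its control
# flow, into a static graph that can be saved and run outside Python.
scripted = torch.jit.script(model)
graph_out = scripted(example)

# Same weights, same inputs: both modes should agree.
print(torch.allclose(eager_out, graph_out))
```

The point of the illustration is that the researcher writes the model once; the graph version is derived from it rather than reimplemented for production.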

In other words, Facebook is choosing PyTorch because it’s a single framework for both research and production AI models that provides flexibility to experiment and also the ability to launch AI at large scale when it’s ready for prime time. That makes it possible to deploy new models in minutes rather than weeks, Facebook said, while also reducing the infrastructure and engineering burden that comes with maintaining two different AI systems.

Another benefit of PyTorch is that Facebook’s developers don’t have to go through the tedious process of reimplementing models each time they’re updated to ensure their performance remains consistent.

Facebook Product Manager Cornelia Carapcea said in the briefing that hate speech models are a good example of the speed and simplicity PyTorch affords. Previously, such models took months to build and then ship into production.

“PyTorch allows us to work on these big models and can understand all integrity issues in a single model,” Carapcea said. “Basically, it fast-tracks this whole cycle of building models.”

Schroepfer noted that PyTorch isn’t actually any faster than the Caffe2 AI framework; rather, its advantage is that it’s much easier to use.

“PyTorch is good enough in terms of performance for production and it’s easier not to have to switch when going into production,” he said. “It’s just a massive accelerant to what we do.”

PyTorch also has an advantage when it comes to running AI models directly on devices such as smartphones. That’s because Facebook created PyTorch Mobile, which reduces runtime binary sizes so that PyTorch models can run on devices with limited processing power. Examples include augmented reality experiences such as AI-powered shopping, which depend on the lowest possible latency to deliver a good experience.

One final advantage of shifting to PyTorch is that Facebook will be able to work more closely with the wider open-source community.

With reporting from Robert Hof
