Today we're addressing one of the most frequent discussion topics surrounding the new RTX 2080 and RTX 2080 Ti graphics cards. As you already know from our reviews, these cards do not offer great value right now. The vanilla 2080 offers GTX 1080 Ti-like performance at a higher price, while the RTX 2080 Ti is the fastest card on the market, but carries a ~80% price premium over the 1080 Ti for about 30% more performance.
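To put that value gap in numbers, here's a quick back-of-the-envelope sketch. It uses only the approximate figures quoted above (an ~80% price premium for ~30% more performance). It also assumes price and performance can each be collapsed into a single ratio, which is a simplification.

```python
def relative_perf_per_dollar(price_premium: float, perf_gain: float) -> float:
    """Performance per dollar of the newer card relative to the older one (1.0 = same value)."""
    return (1.0 + perf_gain) / (1.0 + price_premium)

# Approximate figures from above: ~80% higher price, ~30% more performance.
value = relative_perf_per_dollar(price_premium=0.80, perf_gain=0.30)
print(f"RTX 2080 Ti performance per dollar vs GTX 1080 Ti: {value:.2f}x")  # 0.72x
```

On these rough numbers, each dollar spent on the 2080 Ti buys about 28% less performance than it would on a 1080 Ti.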

But the question, or even just the blanket statement, we keep seeing pop up relates to DLSS. Is it worth buying an RTX 2080 for DLSS, or is DLSS the killer feature for RTX cards? As with ray tracing, we won't really know until we have more to test with, but today we're doing an early investigation into DLSS using the DLSS demos currently within reach.

We weren't going to cover DLSS so soon because there are only two Nvidia-provided demos available that let us see DLSS in action. Neither of them is an actual game; both are canned benchmarks, so they're not the best way to explore and analyse DLSS. But we do see a lot of questions out there, so ultimately we decided it warranted an early investigation.

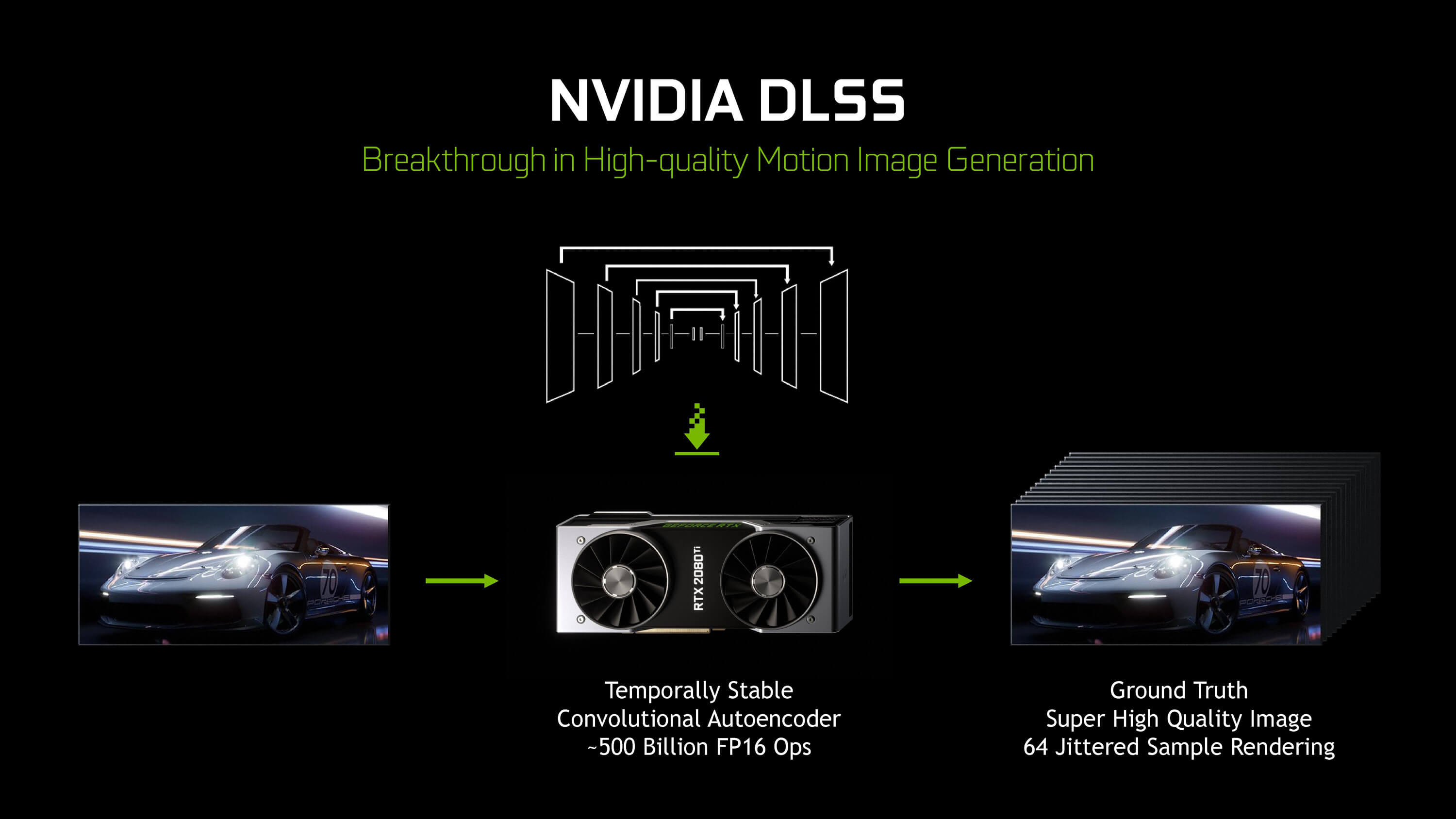

DLSS stands for "deep learning super sampling," and it's a new rendering technology that only works with GeForce RTX graphics cards. There are two DLSS modes, but we can only test one of them right now, the standard DLSS mode, so it's the only one we'll be focusing on.

While the name does have "super sampling" in it, the standard DLSS mode isn't really super sampling, it's more of an image reconstruction technique that renders a game at a sub-native resolution, then uses AI inferencing to upscale and improve the image. And of course, the AI processing element of DLSS is only possible thanks to Turing's tensor cores.

So what DLSS aims to provide is a 4K image equivalent to a native 4K presentation, but with higher performance than you'd otherwise get through native rendering. This is possible because at 4K, DLSS actually renders the game at approximately 1440p, which means shading about 2.25 times fewer pixels, and then upscales the result using an AI network trained on 64xSSAA reference imagery.
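The "2.25 times fewer pixels" figure is simple arithmetic. Here's a small sketch, assuming "4K" means 3840×2160 UHD and 1440p means 2560×1440, and treating one shaded sample per pixel (no MSAA).

```python
# Standard 16:9 resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

def pixel_count(name: str) -> int:
    width, height = RESOLUTIONS[name]
    return width * height

def pixel_ratio(target: str, source: str) -> float:
    """How many times more pixels `target` has than `source`."""
    return pixel_count(target) / pixel_count(source)

print(pixel_count("4K"), pixel_count("1440p"))  # 8294400 3686400
print(f"{pixel_ratio('4K', '1440p'):.2f}x")     # 2.25x
print(f"{1440 / 2160:.3f} per axis")            # 0.667 per axis
```

Because 1440p is two-thirds of 4K along each axis, the pixel count drops by the square of that factor, which is why the saving is larger than a simple "2x".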

You could think of it as an advanced upscaling technology similar to checkerboard rendering or temporal reconstruction, techniques that game consoles like the PS4 Pro use to run games at "4K" while actually rendering them at lower resolutions. In theory, DLSS fixes a lot of the issues with checkerboard rendering, making it more suitable for PC gaming, where the artefacts generated by checkerboarding are much more noticeable.
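For readers unfamiliar with checkerboarding, here's a heavily simplified sketch of the core idea: each frame shades only half the pixels in an alternating checkerboard pattern and reuses the previous frame's values for the other half. Real console implementations add motion compensation and other tricks to cope with movement; this sketch has none of that, and the function and variable names are purely illustrative.

```python
from typing import Callable, List

Frame = List[List[float]]

def checkerboard_frame(shade: Callable[[int, int], float], prev: Frame, frame_index: int) -> Frame:
    """Shade only half the pixels (a checkerboard that flips every frame) and
    keep the previous frame's values for the rest. No motion compensation."""
    height, width = len(prev), len(prev[0])
    out = [row[:] for row in prev]
    for y in range(height):
        for x in range(width):
            if (x + y + frame_index) % 2 == 0:
                out[y][x] = shade(x, y)
    return out

def static_scene(x: int, y: int) -> float:
    """A hypothetical test 'scene' whose colour depends only on position."""
    return float(x + 10 * y)

width, height = 4, 4
frame = [[0.0] * width for _ in range(height)]
frame = checkerboard_frame(static_scene, frame, 0)  # half the pixels are correct
frame = checkerboard_frame(static_scene, frame, 1)  # the other half fill in
full = [[static_scene(x, y) for x in range(width)] for y in range(height)]
print(frame == full)  # True, but only because the scene didn't move
```

If the scene changes between frames, half the pixels are always one frame stale, which is where the combing and edge artefacts associated with checkerboarding come from.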

Visual Quality and Fidelity

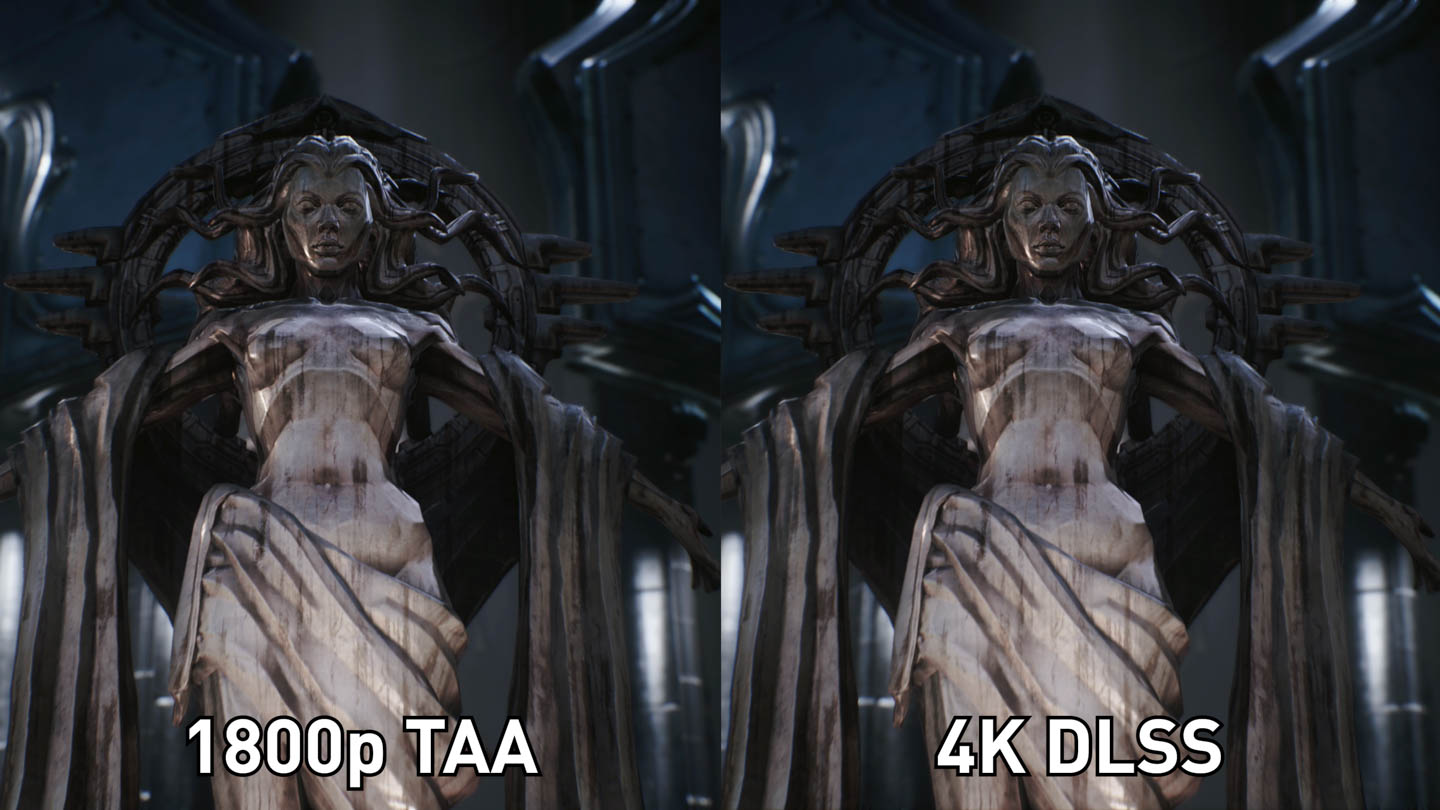

We highly recommend clicking through to view the full 4K-resolution comparison images.

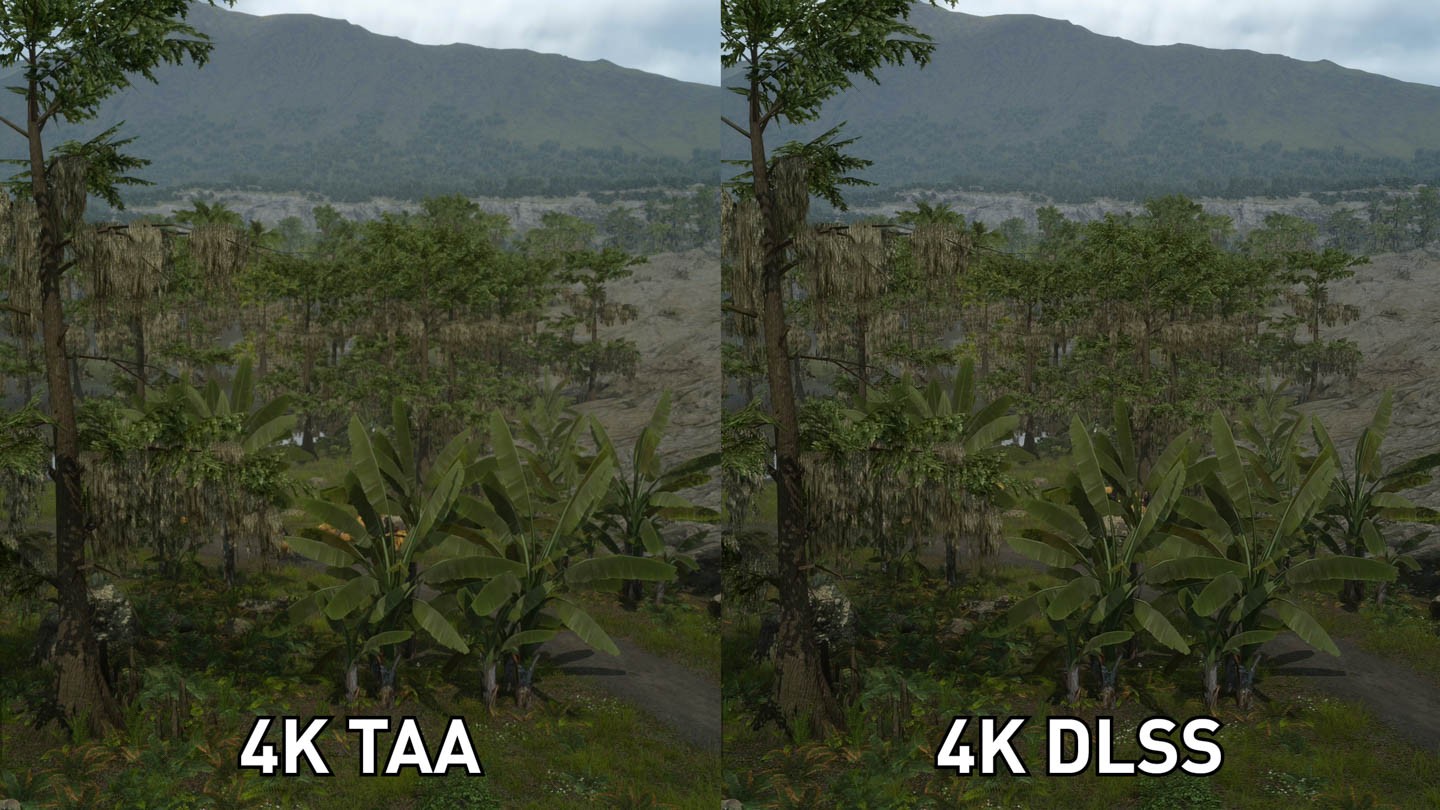

Let's get into some quality comparisons, starting with the Final Fantasy XV benchmark. First up we have a direct comparison of native 4K with temporal anti-aliasing, or TAA, up against DLSS at 4K. It's an interesting comparison to make, because in some situations, DLSS provides superior image quality to the native 4K TAA presentation, such as with distant trees in some shots.

However, for the most part the 4K TAA image is better, giving you improved fine detail in many scenes, particularly the car at the beginning of the benchmark run, the grass a bit later on, and the close-up food shot towards the end. The differences in quality range from very hard to spot to quite noticeable on a 4K display.

It seems that DLSS struggles with a lot of the fine, high-resolution texture work that the native image provides, especially in more static shots. However, as DLSS doesn't have a temporal component, it finds it easy to clean up moving shots where TAA either blurs the image or introduces artefacts, which is why it's superior in some respects.
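Why does a temporal technique struggle with motion? At its core, TAA blends each new frame into an accumulated history. Here's a minimal sketch of that blend for a single pixel, assuming a simple exponential moving average with an illustrative blend weight of 0.1. Real TAA also reprojects the history with motion vectors and rejects or clamps stale samples; those steps are omitted here, and their quality is largely what separates good implementations from bad ones.

```python
from typing import List

def taa_resolve(history: float, current: float, alpha: float = 0.1) -> float:
    """Blend the new sample into the accumulated history (exponential moving average).
    alpha is an illustrative weight, not taken from any particular game."""
    return alpha * current + (1.0 - alpha) * history

# One pixel that switches from dark (0.0) to bright (1.0) when an edge moves across it.
samples: List[float] = [0.0] * 5 + [1.0] * 5
history = samples[0]
resolved: List[float] = []
for sample in samples:
    history = taa_resolve(history, sample)
    resolved.append(round(history, 3))

# Five frames after the change the pixel is still well short of 1.0: the old
# value lingers, which shows up on screen as smearing or ghosting in motion.
print(resolved)
```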

DLSS, at least in this benchmark, is pretty close to the native 4K presentation, but it's definitely not the same as native 4K, and those with high-resolution displays will miss out on the extra detail a true native presentation delivers.

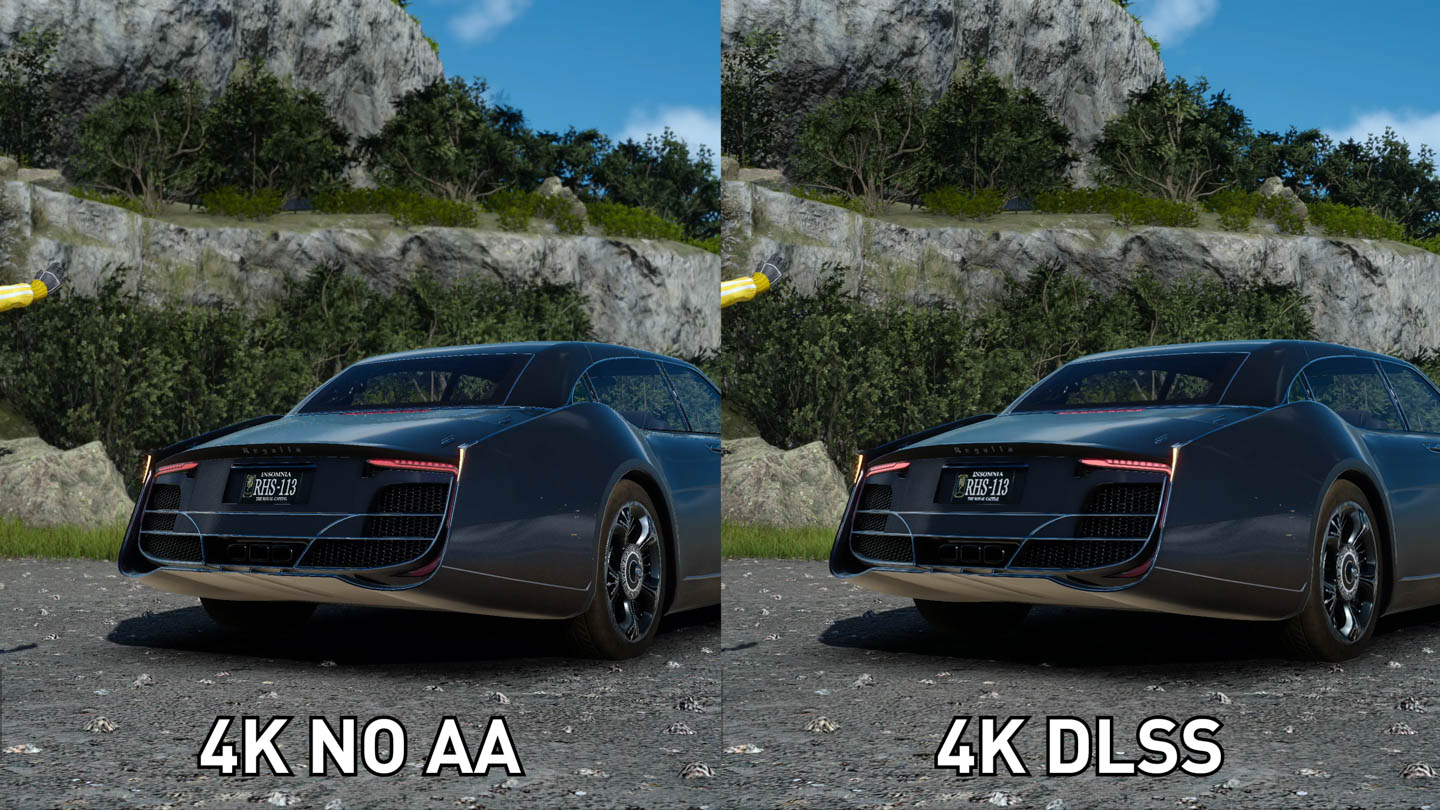

Of course, DLSS vs 4K TAA is the stock standard comparison Nvidia suggests. So I decided to look at a few other comparisons, starting with DLSS vs a 4K image with no anti-aliasing.

Right away you'll spot a ton of aliasing artefacts in the 4K no-AA image, which is to be expected, and I wouldn't suggest anyone play with AA disabled. It's especially bad on the character's hair and the grass.

But the no-AA image does produce noticeably sharper and clearer textures when you look at, say, the middle of an object where there are few jaggies or artefacts. Distant tree quality has also improved to the point where the no-AA presentation is equivalent to DLSS rather than inferior to it.

And this begins to highlight one of the key issues with Final Fantasy XV's anti-aliasing: it's rubbish. The TAA implementation in this game is terrible; it behaves more like a blur filter than a decent AA implementation that preserves fine detail while smoothing out edges and removing shimmering. Enabling TAA in this game simply wipes out a lot of the fine texture detail you'd otherwise get at 4K, and completely destroys scenes during motion.

I'm not the biggest fan of TAA in most games, but it is possible to get right. Shadow of the Tomb Raider for example is a game with decent TAA that softens edges without significantly reducing texture quality. But in this particular game, TAA... well it sucks, to be honest.

Because Final Fantasy XV's TAA is so bad, the game isn't a great basis for comparing a native 4K image with DLSS. A lot of games use far better anti-aliasing, either better TAA, techniques like SMAA, or a combination of both. So it's all well and good to say DLSS looks pretty close to FFXV's native 4K TAA presentation, but how will it compare in the majority of games, which have better anti-aliasing and therefore a better native 4K image? It's hard to say for certain, but I'd expect the gap in image quality to widen.

That's not to say DLSS is going to be a bust, there's certainly a place for a rendering technique that provides better performance for a small reduction in image quality. After all, we already have quality settings for a whole range of other effects. But I don't think the FFXV demo is great for judging that exact quality reduction for most games.

There is one very nice thing to highlight: 4K DLSS is far superior to a native 1440p presentation. As you might recall, DLSS upscales from 1440p to try to imitate 4K. At native 1440p, textures and fine detail are noticeably blurrier when we upscale that image to 4K ourselves, whereas DLSS upscaling is like a magic filter that cleans everything up.
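Why does a plain 1440p-to-4K upscale look soft? Going from 1440p to 4K is a 1.5x scale on each axis, so most output pixels land between source pixels and have to be interpolated. Below is a sketch of linear interpolation along a single row. A bilinear scaler applies the same step along rows and then columns. Which scaler was actually involved in our comparison is an assumption here, so treat this as a generic illustration rather than a model of any specific display path.

```python
from typing import List

def upscale_row_linear(row: List[float], new_len: int) -> List[float]:
    """Resample a row of pixel values with linear interpolation (pixel-centre aligned).
    Bilinear image scaling applies this along rows, then along columns."""
    old_len = len(row)
    scale = old_len / new_len
    out: List[float] = []
    for i in range(new_len):
        src = (i + 0.5) * scale - 0.5           # map the output pixel centre into source space
        src = min(max(src, 0.0), old_len - 1)   # clamp at the borders
        left = int(src)
        right = min(left + 1, old_len - 1)
        t = src - left
        out.append((1 - t) * row[left] + t * row[right])
    return out

# A one-pixel-wide bright detail, scaled by 1.5x (6 source pixels -> 9 output pixels).
row = [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
upscaled = upscale_row_linear(row, 9)
print([round(v, 2) for v in upscaled])
```

The single bright pixel gets spread across several output pixels and never reaches full brightness, which is the blur you see in an upscaled 1440p image. Interpolation can only average what was rendered; it can't invent new detail.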

Even after applying a sharpening filter with ReShade to try to (I guess) cheat a bit and clean up the blurry 1440p TAA image, it's still not as clean as the DLSS image, though it is better than the regular, unsharpened 1440p presentation.
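Sharpening filters generally work by exaggerating local contrast. Here's a sketch of a generic unsharp mask on a single row of pixels. To be clear, this is a textbook technique chosen for illustration, not the actual shader ReShade uses, and the 3-tap blur and `amount` parameter are assumptions.

```python
from typing import List

def box_blur(row: List[float]) -> List[float]:
    """3-tap box blur with edge clamping."""
    n = len(row)
    return [(row[max(i - 1, 0)] + row[i] + row[min(i + 1, n - 1)]) / 3 for i in range(n)]

def unsharp_mask(row: List[float], amount: float = 1.0) -> List[float]:
    """Sharpen by adding back the difference between the row and a blurred copy,
    clamped to the displayable 0..1 range."""
    blurred = box_blur(row)
    return [min(max(p + amount * (p - b), 0.0), 1.0) for p, b in zip(row, blurred)]

soft_edge = [0.0, 0.0, 0.25, 0.75, 1.0, 1.0]   # a blurry edge, as in an upscaled image
sharpened = unsharp_mask(soft_edge)
print([round(v, 2) for v in sharpened])
```

The edge gets steeper (0.25 drops and 0.75 rises), so the image looks crisper, but the filter only amplifies detail that's already present. That helps explain why a sharpened 1440p image improves on plain 1440p yet still falls short of DLSS.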

Some Early Benchmarks and More Comparisons

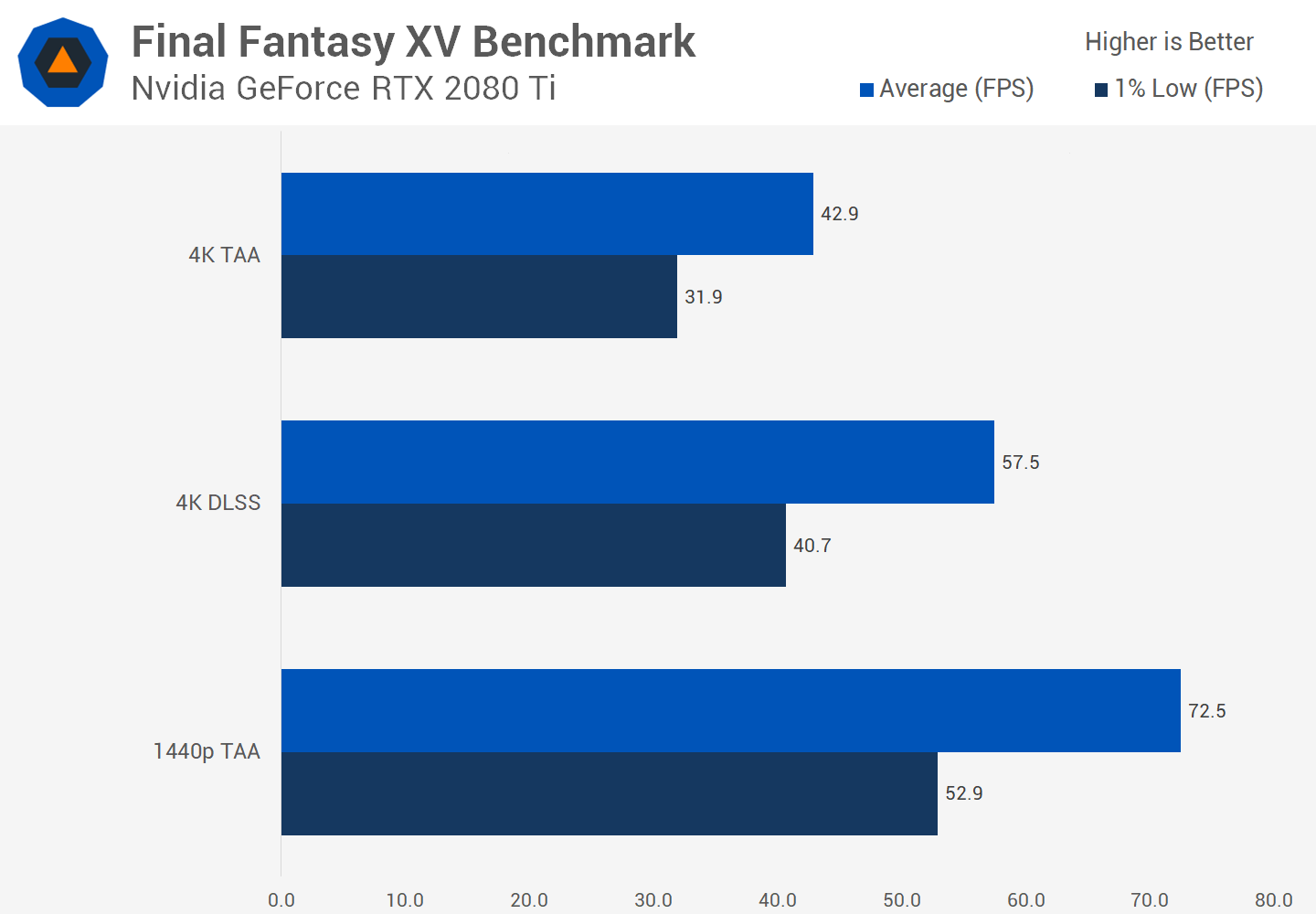

In terms of performance, DLSS isn't a free anti-aliasing or upscaling technique, so you're not going to get 1440p-class performance with a near-4K presentation. In our runs of Final Fantasy XV's benchmark, DLSS improved performance over native 4K by 34% in average framerate and by 27% in 1% lows. However, running at native 1440p improved things again, producing a 26% higher average framerate than 4K DLSS.
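Chaining those percentages shows where DLSS sits between the two native options. This is arithmetic on the average-framerate figures above, not additional measurement. It relies on both percentages being ratios of average framerate from the same set of runs, which lets them multiply.

```python
# Final Fantasy XV average framerates, normalised so that native 4K = 1.0.
native_4k = 1.0
dlss_4k = native_4k * 1.34       # DLSS: +34% vs native 4K
native_1440p = dlss_4k * 1.26    # native 1440p: +26% vs 4K DLSS

# Fraction of the "drop to 1440p" speed-up that DLSS retains.
share = (dlss_4k - native_4k) / (native_1440p - native_4k)

print(f"Native 1440p vs native 4K: {native_1440p:.2f}x")    # 1.69x
print(f"DLSS delivers {share:.0%} of that speed-up")         # 49%
```

In other words, native 1440p runs roughly 69% faster than native 4K here, and DLSS captures about half of that gain. The rest goes to the cost of the upscaling itself.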

And I think that's a reasonable reflection of how the visual quality stacks up: DLSS is a middle ground between native 1440p and native 4K, though it's closer to 4K in terms of quality, so of these three options I think it could deliver the best balance between performance and visuals. For those less likely to notice the quality reduction compared to native 4K, DLSS could be the way to go. But again, this is from looking at only one game; we'll have to wait for actual playable games to see how it stacks up in real-world use.

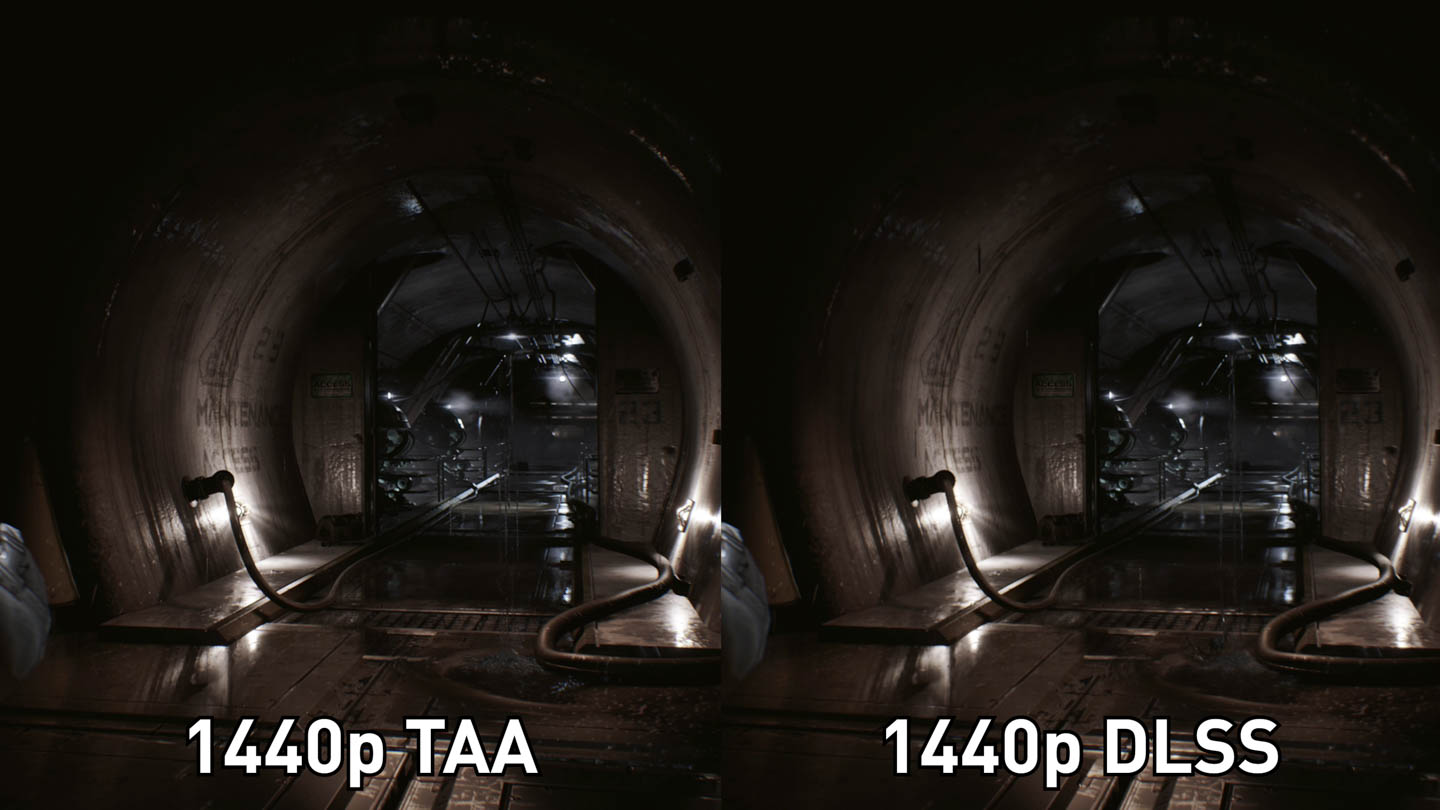

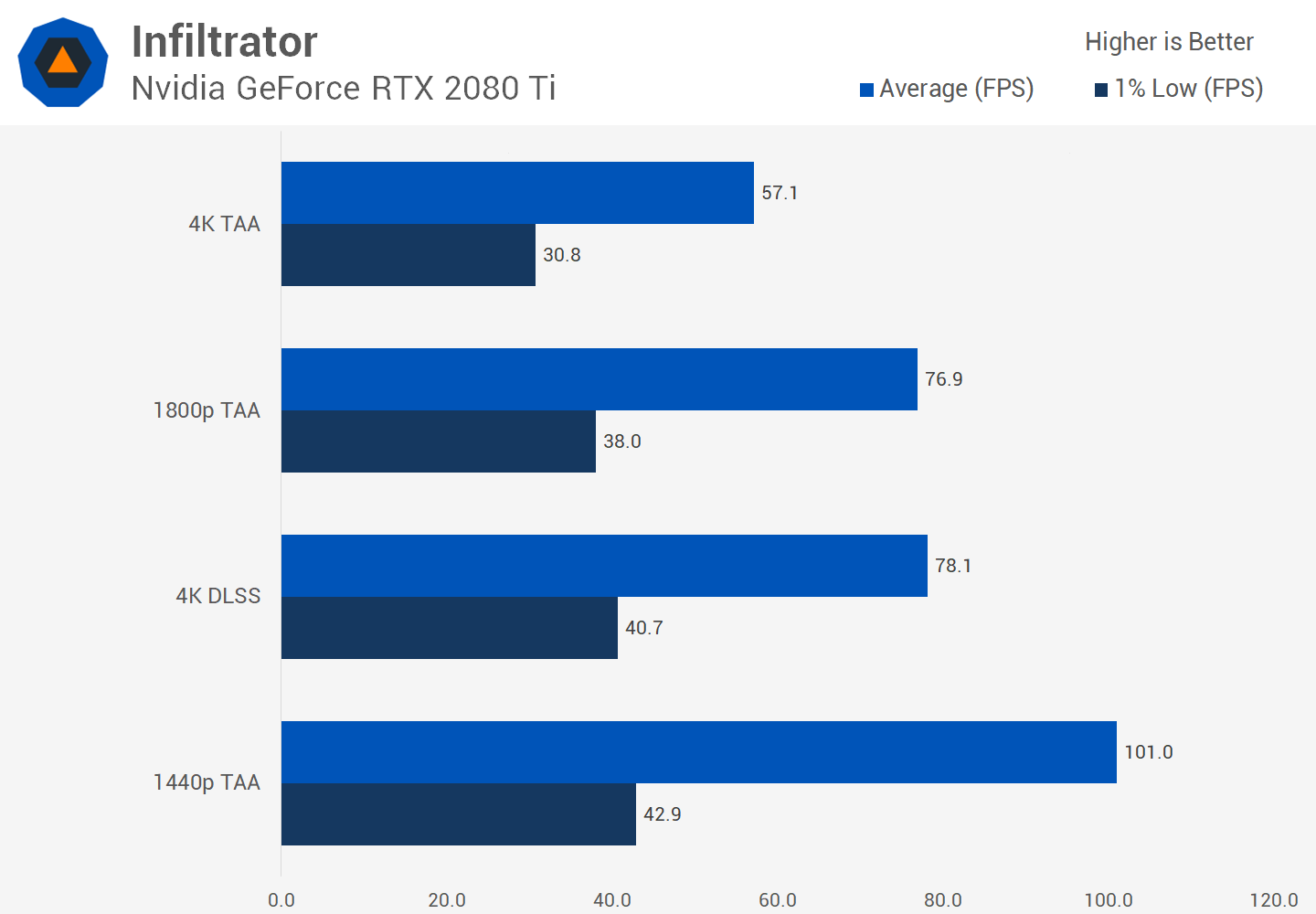

The other demo we can currently test DLSS in is Epic's Infiltrator. Pretty similar story when comparing DLSS to 4K TAA, except in this demo the TAA implementation isn't complete garbage. DLSS does clean up a few of the temporal artefacts that TAA introduces, however the 4K TAA image is sharper and clearer throughout the run, and it's particularly noticeable during the slower panning sections.

It's not a bad effort from DLSS considering it's working with a 1440p source. It gets pretty close to native 4K, and in a lot of scenes I found it hard to spot any differences. However, native 4K is that bit sharper, so I wouldn't call the two images equivalent.

Again, comparing 1440p to 4K DLSS does show DLSS to be quite a bit better in terms of its clarity and overall visual quality, so DLSS is once again working some black magic to upscale the image significantly above the source 1440p material.

This is perhaps the most interesting comparison we'll see. The Infiltrator demo allows you to mess around with the resolutions a bit more than FFXV, so here I have a native 1800p image, upscaled to 4K, next to 4K DLSS.

This is a serious battle: in some situations I noticed fewer jagged edges with the upscaled 1800p image, and in others I noticed higher detail with the DLSS imagery. It's an incredibly close comparison; where native 4K was that little bit sharper than DLSS, 1800p is a very good match for it.

And here's the fun part. Native 1800p rendering delivers nearly identical performance to DLSS in the Infiltrator demo: DLSS is about 2 percent faster in average framerate and 6 percent faster in 1% lows. Of course, DLSS is a good 37 percent faster than native 4K in average framerate, but the key point is that DLSS is nearly visually identical to 1800p while also delivering near-identical performance.
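The pixel counts make this result more striking. The sketch below assumes 1800p means 3200×1800 and reuses only the Infiltrator average-framerate figures quoted above, normalised to native 4K.

```python
def pixels(width: int, height: int) -> int:
    return width * height

p_4k = pixels(3840, 2160)
p_1800 = pixels(3200, 1800)
p_1440 = pixels(2560, 1440)   # DLSS's internal resolution when outputting 4K

print(f"1800p renders {p_1800 / p_4k:.1%} of the 4K pixel count")        # 69.4%
print(f"1800p renders {p_1800 / p_1440:.2f}x the pixels of 1440p")       # 1.56x

# Infiltrator average framerates, normalised so that native 4K = 1.0.
dlss = 1.37                   # DLSS: +37% vs native 4K
native_1800p = dlss / 1.02    # DLSS is ~2% faster than native 1800p
print(f"Native 1800p vs native 4K: {native_1800p:.2f}x")                 # 1.34x
```

Native 1800p shades about 56% more pixels than the 1440p image DLSS starts from, yet ends up only about 2% slower. That suggests the DLSS reconstruction step costs almost as much as rendering those extra pixels natively, at least in this demo.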

Just before I jump into the conclusion, I wanted to briefly touch on DLSS at 1440p, which is the lowest resolution DLSS supports. Here, DLSS renders the game at 1080p and then upscales, and I think a lot of what I said about 4K DLSS vs 4K TAA holds up here as well. If anything, the difference in clarity is a little more pronounced at this resolution, in favor of native 1440p. I believe the demo is also CPU-limited here with the 2080 Ti and an overclocked 8700K, so there's not much point talking about performance.
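Why does a CPU limit make the performance comparison meaningless? A common simplified model is that the slower of the CPU and GPU sets the delivered framerate. In the sketch below, the framerate values are hypothetical and chosen only to illustrate the model; they are not our measurements.

```python
def delivered_fps(cpu_fps_cap: float, gpu_fps: float) -> float:
    """Simplified bottleneck model: whichever of CPU or GPU is slower sets the frame rate."""
    return min(cpu_fps_cap, gpu_fps)

# 1440p DLSS renders internally at 1080p, so the GPU shades fewer pixels than at native 1440p.
pixel_ratio = (2560 * 1440) / (1920 * 1080)   # ~1.78x more pixels at native 1440p

# Hypothetical numbers for illustration only (not measured):
cpu_cap = 120.0            # what the CPU can prepare per second
gpu_native_1440p = 150.0   # GPU-only rate at native 1440p
gpu_dlss_1440p = 180.0     # GPU-only rate with 1440p DLSS
print(delivered_fps(cpu_cap, gpu_native_1440p), delivered_fps(cpu_cap, gpu_dlss_1440p))  # 120.0 120.0
```

Once both GPU rates exceed the CPU cap, the GPU-side saving from rendering fewer pixels never shows up in the delivered framerate.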

Closing Remarks

There are a few interesting takeaways from this early look at DLSS, but let's start with the limitations. We only have two demos to go on, neither of which is a game we can jump into and freely play. Instead, they are fully canned, on-rails benchmarks, and it could be easier for Nvidia to optimize its AI network for these than for a dynamic game. So the DLSS image quality we're seeing here could be better than what real games will deliver.

It's also a pretty small sample size. Final Fantasy XV is a particularly poor comparison because its stock standard anti-aliasing, TAA, is a rubbish implementation of that technology, which blurs the otherwise sharp, clear imagery you normally get with native 4K. And while Infiltrator isn't as limited in that regard, it's not an actual game.

Nvidia also provided these benchmarks in a way that makes it very difficult to test anything other than the resolutions and quality settings they want us to use. Both demos are launched using batch files with pretty much everything locked down, which is why you might have only seen DLSS vs 4K TAA comparisons up to this point.

I'd have loved to pit DLSS against better anti-aliasing techniques, but it just wasn't possible.

At face value, you look at the 4K TAA vs DLSS comparisons and it's hard not to be impressed. DLSS provides visual quality similar to native 4K; it's not quite as good, but it's close, all while giving you around 35% more performance (34% in FFXV and 37% in Infiltrator, in average framerate). And compared to native 1440p, the resolution DLSS uses as the source for its AI-enhanced image reconstruction, this form of upscaling looks like black magic.

But dig a little deeper, and at least in the Infiltrator demo, DLSS is pretty similar in both visual quality and performance to running the demo at 1800p and upscaling the image to 4K. Again, it's just one demo, but poring over the footage and performance data really tempered my expectations for DLSS in real-world games.

After all, anyone with an older GPU, say a Pascal-based 1080 Ti, could simply run games at 1800p and get similar performance and visual quality to DLSS on an RTX 2080. That is, if real-world in-game implementations of DLSS are similar to what we saw in Infiltrator.

This is partly why I didn't want to explore DLSS fully until we had more tools at our disposal. We still don't know how DLSS compares to different AA techniques, or whether running at something like 1800p with a superior form of low-cost AA would deliver better results than DLSS. Without proper integration in real games, it's simply too early to say for sure, but going on what I've seen so far, I don't think DLSS is as revolutionary or important as Nvidia are making out.

The list of games that will support DLSS isn't exactly filled with the hottest upcoming titles either. There are some big games in there, but plenty won't support DLSS. That's one less reason to upgrade to an RTX graphics card before we take a real look at ray tracing. We'll be revisiting DLSS when we can actually play games with it; hopefully that will be soon.